I have always been a firm believer that there is no such thing as a dumb question. Many times I have outed myself as the dumbest guy in the room with a simple question, and in the process, I hope I have been able to enlighten others.

“What is the difference between an API and a microservice?”

I was recently asked this question by a senior banking executive. She went on to explain that some of her stakeholders were struggling with the difference, and asked if MuleSoft could help. Part of my job as a Client Architect is to act as a translator: to help business folks make sense of technology mumbo jumbo. I need to help people understand not just what this tech jargon means, but how it might bring value to their business. So naturally, I was up to the task. Answering this question also helped place Anypoint Platform in this overall context, which is worth sharing in this post.

What are microservices?

At MuleSoft, we define microservices as an architectural pattern for creating applications. Under this pattern, applications are structured as a collection of loosely coupled services. This is distinct from traditional applications, or monoliths, that are structured as single self-contained artifacts. Think of a microservice-based application as one built from Lego bricks, and a monolith as one built from a single slab of concrete.

In its purest form, an individual microservice encapsulates some atomic business function, such as “CreateOrder.” This function could be implemented on any language or runtime (.NET, Java, Node.js). Individual microservices can be reused across different areas of the enterprise, or even externally by partners and customers.
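To make the idea of an atomic business function concrete, here is a minimal sketch in Python. The class name, method name, and fields are all hypothetical and chosen only for illustration. A real microservice would also expose this function over a network interface and persist its data somewhere durable.

```python
import itertools


class CreateOrderService:
    """Hypothetical microservice that owns exactly one business function."""

    def __init__(self):
        # The service keeps its own private store; no other service writes to it.
        self._orders = {}
        self._next_id = itertools.count(1)

    def create_order(self, customer_id, items):
        """Validate the request, record the order, and return it as plain data."""
        if not items:
            raise ValueError("an order needs at least one item")
        order_id = next(self._next_id)
        order = {
            "order_id": order_id,
            "customer_id": customer_id,
            "items": list(items),
        }
        self._orders[order_id] = order
        return order
```

Because the function takes and returns plain data, any caller that can build the request can reuse it, whatever language that caller happens to be written in.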

A full microservices architecture can potentially encompass thousands of individual microservices. To manage that many microservices, a fully formed microservices architecture requires support from other technologies and processes. This includes cross-cutting services, APIs, CI/CD, and containerization, all of which are considered integral to a microservices architecture.

Benefits of microservices

The primary benefit of microservices is that they have the potential to increase agility and productivity for implementing enterprises. New business services are developed through a combination of new microservices and the reuse of existing microservices. The resulting new service can be rapidly deployed and tested in an automated cycle that will speed time to market for new business initiatives.

Rapid deployment means that business stakeholders can verify in real time that an application meets its intended business objective. Once business objectives have been verified, security, resiliency, and scalability can be dynamically configured at the microservice level. This encourages operational flexibility and infrastructure optimization.

Challenges of microservices

Whilst microservices offer the potential to speed delivery of business initiatives, they also present challenges. The complexity of a large-scale microservices architecture cannot be overstated. If microservices are not established and managed correctly, they can lead to slower delivery speeds and poor operational performance.

In developer-led enterprises, these challenges can manifest in the need for teams to spend time building cross-cutting supporting services. The tendency can be to roll your own cross-cutting services on top of a PaaS. PaaS vendors, such as AWS and Azure, provide discrete components of a microservices architecture such as API management, message-oriented middleware, and service directories. These require developers to wire components together to form the foundational services of their microservices architecture. This effort diverts teams from building business services to building supporting services instead.

Enter Anypoint Platform

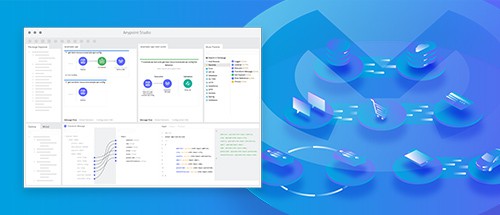

Bootstrapping a microservices architecture requires addressing the challenges of scale outlined above. This is where Anypoint Platform fits in: it provides a suite of services that lets developers build, manage, and reuse microservices.

The role of APIs in microservices

APIs are standardized wrappers that create an interface through which microservices can be packaged and surfaced. This makes APIs the logical enforcement point for key concerns of a microservices architecture such as security, governance, and reuse. Because APIs are able to house these concerns, they are considered a foundational component of a microservices architecture.
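The difference between the API and the microservice behind it can be sketched in a few lines of Python. Everything here is an illustrative assumption, not Anypoint Platform's API: the `ApiGateway` class, the API-key lookup, and the per-client quota are hypothetical stand-ins for security and governance policies.

```python
class ApiGateway:
    """Hypothetical API layer that wraps a microservice callable."""

    def __init__(self, service, api_keys, max_calls_per_client):
        self._service = service          # the microservice being surfaced
        self._api_keys = api_keys        # maps API key -> client name
        self._max_calls = max_calls_per_client
        self._calls = {}                 # client name -> calls made so far

    def handle(self, api_key, payload):
        # Security: reject callers that do not present a known key.
        client = self._api_keys.get(api_key)
        if client is None:
            return {"status": 401, "body": "unknown API key"}
        # Governance: enforce a simple per-client quota.
        used = self._calls.get(client, 0)
        if used >= self._max_calls:
            return {"status": 429, "body": "quota exceeded"}
        self._calls[client] = used + 1
        # Only now is the request passed to the business logic.
        return {"status": 200, "body": self._service(payload)}


def create_order(payload):
    """Stand-in microservice: business logic only, no security code."""
    return {"order_id": 1, "items": payload["items"]}


gateway = ApiGateway(create_order, {"secret-1": "partner-a"}, max_calls_per_client=1)
```

Note that `create_order` contains no security or quota logic at all. Those concerns live in the wrapper, which is why the same microservice can be offered to a new audience by changing the API's policies rather than the service.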

How Anypoint Platform fits in

MuleSoft has built on this concept of the API as a foundational component by developing Anypoint Platform, a solution that enables the development and delivery of the API layer that describes and enriches enterprise microservices. Anypoint Platform provides critical cross-cutting services to these microservices, including security, governance, and reuse.

Additionally, Anypoint Platform can be used to implement full stack microservices, often referred to as integration microservices. Integration microservices combine orchestration between business microservices with connectivity to legacy assets. Integration microservices form a connective tissue across a microservices architecture.
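As a rough sketch of what an integration microservice does, the Python below orchestrates a call to a legacy system and a call to a business microservice. The function names, the fixed-width record format, and the stock figures are all invented for illustration, and the code says nothing about how Anypoint Platform implements this.

```python
def legacy_inventory_lookup(sku):
    """Stand-in for a legacy system that answers with a fixed-width record."""
    stock = {"WIDGET": 3, "GADGET": 0}
    # 10 characters of SKU followed by a 5-digit, zero-padded quantity.
    return f"{sku:<10}{stock.get(sku, 0):05d}"


def parse_legacy_record(record):
    """Connectivity: translate the legacy record into plain data."""
    return {"sku": record[:10].strip(), "in_stock": int(record[10:15])}


def create_order(customer_id, sku):
    """Stand-in business microservice."""
    return {"customer_id": customer_id, "sku": sku, "status": "created"}


def place_order(customer_id, sku):
    """Integration microservice: check legacy stock, then create the order."""
    inventory = parse_legacy_record(legacy_inventory_lookup(sku))
    if inventory["in_stock"] < 1:
        return {"customer_id": customer_id, "sku": sku, "status": "rejected"}
    return create_order(customer_id, sku)
```

Here `place_order` holds no business data of its own. Its value is in the sequencing and in hiding the legacy format from every other service.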

Go forth and conquer the world of microservices

Microservices represent the next frontier in application development, allowing businesses to rapidly deliver new initiatives. Key to this is a pre-packaged suite of services that supports the implementation of APIs, together with a framework for managing large-scale microservices architectures. MuleSoft provides such a solution, Anypoint Platform, which enables enterprises to embrace microservices today.

Learn more about best practices for microservices and microservices patterns.

Source: https://blogs.mulesoft.com/biz/microservices-biz/microservices-versus-apis/
